A lively discussion on the NANOG mailing list recently focused on "gray" failures. What is a gray failure in a computer network?

Networks are not reliable: anyone who has ever seen a "Connection timed out" message can attest to that. From an operator's configuration error to a backhoe cutting a fiber, not to mention router bugs, there is no shortage of causes of failure. These failures are usually binary: either all communication gets through or none does. Although they are not easy to prevent, or even to repair, they are trivial to detect: the monitoring system raises alarms and users complain. But there are also "gray" failures. In these, things go wrong but not in a binary way: the vast majority of the traffic gets through (so nothing may be noticed), while a small minority of packets is hit, according to criteria that are not obvious at first sight.

For example, the network lets every packet through except those carrying a particular combination of options. Or it lets 99.99% of packets through but drops the rest (outside of congestion, where dropping packets is normal). In the NANOG discussion, an engineer cited a case he had run into where a faulty line card dropped every IPv6 packet whose destination address had its 65th bit set to 1 (which is apparently quite rare). The term has also been used outside networking (for example, in a Microsoft study).
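To make the line-card case concrete, here is a minimal Python sketch of the predicate involved, using the standard `ipaddress` module. Bit 65, counting from 1 at the most significant bit, is the first bit of the 64-bit interface identifier. The function names and the model of the card (drop whenever that bit is 1) are hypothetical illustrations, not the vendor's actual logic.

```python
import ipaddress

def bit_65(address: str) -> int:
    """Return bit 65 of an IPv6 address, counting from 1 at the most significant bit."""
    value = int(ipaddress.IPv6Address(address))
    # Bit 1 is at position 127 from the right, so bit 65 is at position 128 - 65 = 63.
    return (value >> (128 - 65)) & 1

def faulty_card_forwards(dst: str) -> bool:
    """Hypothetical model of the faulty line card: drop when bit 65 of dst is 1."""
    return bit_65(dst) == 0

if __name__ == "__main__":
    for dst in ["2001:db8::1", "2001:db8::8000:0:0:1"]:
        print(dst, "forwarded" if faulty_card_forwards(dst) else "dropped")
```

Both example destinations sit in the same /64, yet only one of them is affected, which is part of what makes this kind of fault hard to spot.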

The NANOG discussion was started in the context of a study by ETH Zurich, which began with a survey asking operators whether, and how, they deal with gray failures.

First, a finding that is hardly original but worth remembering: even outside congestion, no network delivers 100% of packets. Problems are always present, because networks are physical objects, subject to the laws of physics and to various disturbances (such as cosmic rays). No machine is perfect: the electronics are real, not virtual, and therefore imperfect, and a router's memory does not necessarily keep intact what has been entrusted to it. And no, the FCS (Frame Check Sequence) and ECC (error-correcting code) memory do not catch every problem.
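One reason the FCS cannot catch everything: a router rewrites the Ethernet header at every hop, so it must compute a new FCS on egress. If a bit flips while the frame sits in the router's memory, the new FCS is computed over already-corrupted data and the next hop's check passes. The sketch below models this in Python under simplifying assumptions; `zlib.crc32` computes the CRC-32 on which Ethernet's FCS is based, while `forward_through_router` and its single-bit-flip fault are hypothetical.

```python
import zlib
from typing import Optional, Tuple

def fcs(frame: bytes) -> int:
    """CRC-32 over the frame, the checksum Ethernet's FCS is based on."""
    return zlib.crc32(frame)

def flip_bit(data: bytes, index: int) -> bytes:
    """Model a memory error by inverting bit `index` of the buffer."""
    buf = bytearray(data)
    buf[index // 8] ^= 1 << (index % 8)
    return bytes(buf)

def forward_through_router(frame: bytes, frame_fcs: int,
                           corrupt_bit: Optional[int]) -> Tuple[bytes, int]:
    """Hypothetical router: verify FCS on ingress, buffer the frame, recompute FCS on egress.
    (The real header rewrite is omitted; only the checksum handling is modeled.)"""
    if fcs(frame) != frame_fcs:
        raise ValueError("FCS mismatch on ingress: frame discarded")
    if corrupt_bit is not None:
        frame = flip_bit(frame, corrupt_bit)  # bit flip while the frame sits in memory
    return frame, fcs(frame)  # the fresh FCS covers the corrupted bytes

if __name__ == "__main__":
    original = b"payload the user actually sent"
    out, out_fcs = forward_through_router(original, fcs(original), corrupt_bit=42)
    print("next hop FCS check passes:", fcs(out) == out_fcs)
    print("payload intact:", out == original)
```

The link-layer check is only as good as the path between the point where the checksum is computed and the point where it is verified; memory inside the box is outside that path.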

As one observer noted:

From long experience, I have drawn a conclusion: computing is between 1% and 2% magic. This is not true, but it is sometimes a relief to think that you may be the target of a voodoo spell or under the protection of a good fairy.

If an operator sincerely claimed to have no gray failures, that would mean they had not looked for them, not that there were none. Closing your eyes does help you sleep better :-)

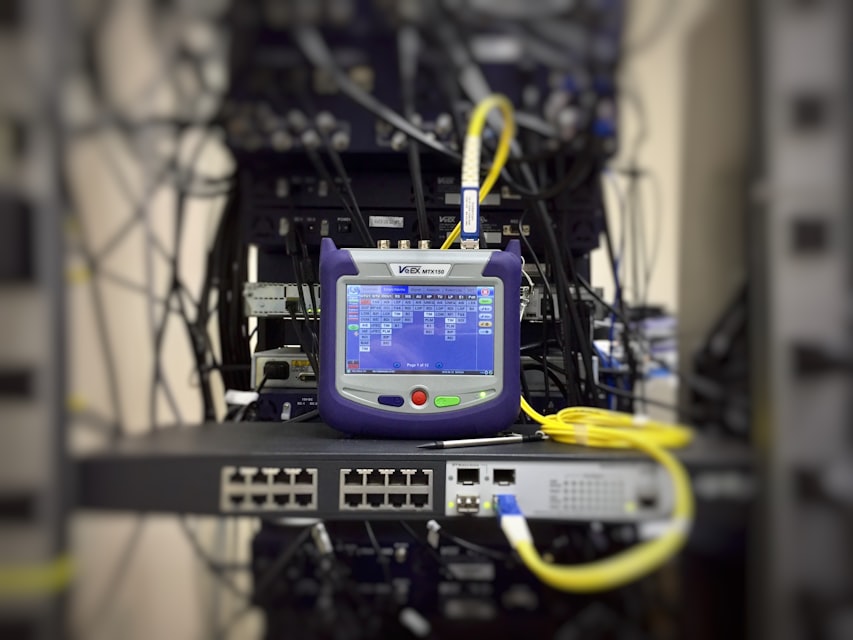

How to detect gray failures?

Gray failures are very hard to detect. Suppose that, like any good engineer, you have a working automatic monitoring system that, say, sends ICMP Echo messages and waits for replies. If each test sends three packets and raises an alarm only when all three are lost, and the network drops 1% of packets at random (a very high figure), the chance that a given test detects the problem is 0.01³, one in a million. Of course, we do not rely on this kind of test alone. We also watch the error counters on router interfaces to see whether they are climbing. But if it is the routers' own electronics that are failing, those counters cannot be trusted either.
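The arithmetic is easy to check. Below is a minimal Python sketch, assuming each probe is lost independently with the same probability and that the test reports a failure only when every probe is lost. It treats each probe as a single packet and ignores the fact that the reply must also make it back. The function names are hypothetical.

```python
def p_all_lost(loss_rate: float, probes: int) -> float:
    """Probability that every probe of one test is lost (the test reports 'down')."""
    return loss_rate ** probes

def p_any_lost(loss_rate: float, probes: int) -> float:
    """Probability that at least one probe of one test is lost."""
    return 1 - (1 - loss_rate) ** probes

if __name__ == "__main__":
    loss, probes = 0.01, 3
    print(f"all {probes} lost: {p_all_lost(loss, probes):.1e}")
    print(f"at least one lost: {p_any_lost(loss, probes):.4f}")
```

With a 1% loss rate and three probes, an all-lost alarm fires about once per million tests. Even a stricter rule that flags any single loss triggers only about 3% of the time, which is easily dismissed as noise.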

Gray failures are sometimes deterministic (as in the 65th-bit example above), but not always, and when they are not reproducible the problem is even worse. Even when they are deterministic, the rule behind them is not necessarily obvious. If packets are dispatched inside a device according to complex criteria, and only one of the internal circuits is faulty, you will not necessarily see right away why traffic sometimes gets through and sometimes does not.
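As an illustration of such a deterministic but non-obvious pattern, here is a Python sketch of a hypothetical device that spreads flows over eight internal lanes by hashing the 5-tuple, with one lane faulty. The lane count, the faulty lane, and the use of CRC-32 as the hash are all assumptions; real hardware uses its own, usually undocumented, hash.

```python
import zlib
from typing import NamedTuple

class Flow(NamedTuple):
    src: str
    dst: str
    proto: int
    sport: int
    dport: int

LANES = 8        # hypothetical number of internal paths through the device
FAULTY_LANE = 5  # hypothetical: the one lane with a bad circuit

def lane_for(flow: Flow) -> int:
    """Choose an internal lane from a hash of the 5-tuple (CRC-32 stands in for the vendor's hash)."""
    key = "|".join(str(part) for part in flow).encode()
    return zlib.crc32(key) % LANES

def delivered(flow: Flow) -> bool:
    """A packet gets through unless its flow is hashed onto the faulty lane."""
    return lane_for(flow) != FAULTY_LANE

if __name__ == "__main__":
    # Same hosts, same HTTPS service; only the client's source port changes.
    for sport in range(50000, 50012):
        flow = Flow("192.0.2.1", "198.51.100.7", 6, sport, 443)
        print(sport, "ok" if delivered(flow) else "lost")
```

Seen from outside, connections between the same two hosts to the same service fail or succeed depending only on the source port, while every packet with a given 5-tuple always meets the same fate. It looks random, but it is not.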

Does this matter to operators? In the NANOG discussion, one participant said frankly that gray failures are common but so hard to deal with (for example, because they often involve long and difficult negotiations with hardware vendors) that they are best ignored unless a customer complains. But the customer does not always complain when the problem is rare and hard to pin down. And modern systems have so many stacked layers that customers have great difficulty locating the source of a problem. For instance, with an IPv6-specific problem like the one mentioned above, the fact that a typical web browser quickly and automatically falls back to IPv4 does not help diagnosis (although it is certainly better for user happiness).
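A simplified Python model shows how such a fallback hides the fault: every page loads, and the only trace of the IPv6 problem is a counter the user never sees. This is a sequential fallback, not the actual Happy Eyeballs algorithm (RFC 8305), which races IPv6 and IPv4 attempts; IPv4 is assumed to always work, and the `gray_ipv6` rule and the other names are hypothetical.

```python
from typing import Callable, Dict

def fetch(dst: str, ipv6_ok: Callable[[str], bool], stats: Dict[str, int]) -> str:
    """Simplified dual-stack fetch: try IPv6 first, fall back silently to IPv4."""
    if ipv6_ok(dst):
        stats["ipv6"] += 1
        return "loaded over IPv6"
    stats["fallback"] += 1  # the only trace of the IPv6 gray failure
    return "loaded over IPv4"

def gray_ipv6(dst: str) -> bool:
    """Hypothetical gray failure: IPv6 breaks for names ending in '7', works otherwise."""
    return not dst.endswith("7")

if __name__ == "__main__":
    stats = {"ipv6": 0, "fallback": 0}
    pages = [fetch(f"host{i}", gray_ipv6, stats) for i in range(20)]
    print(all(p.startswith("loaded") for p in pages), stats)
```

From the user's point of view nothing failed; the diagnostic signal exists only if someone collects and reads the fallback count.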

To give an idea of how subtle gray failures can be, a NANOG participant cited a switch that lost 0.00012% of packets, a rate hard to spot amid measurement error. The problem was only detected once the old tests (like the three ICMP messages mentioned above) were replaced by much more aggressive ones.
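How many probes does it take? Converting 0.00012% to a fraction gives 1.2 × 10⁻⁶. Assuming independent losses, the Python sketch below computes the smallest number of probes that observes at least one loss with 95% probability; `probes_needed` is a hypothetical helper.

```python
import math

def probes_needed(loss_rate: float, confidence: float = 0.95) -> int:
    """Smallest n such that P(at least one of n probes is lost) >= confidence.

    Solves 1 - (1 - loss_rate)**n >= confidence, i.e.
    n >= log(1 - confidence) / log(1 - loss_rate); log1p keeps precision for tiny rates.
    """
    return math.ceil(math.log(1 - confidence) / math.log1p(-loss_rate))

if __name__ == "__main__":
    for rate in (0.01, 1.2e-6):
        print(f"loss {rate:.2e}: {probes_needed(rate):,} probes")
```

For the switch above, this comes out at roughly 2.5 million probes just to see a single loss, and far more to tell it apart reliably from measurement error; three ICMP messages per test do not come close.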

Anyway, when you (well, your web browser) retrieved this article, perhaps a gray failure interfered with the retrieval. Perhaps it was not even detected, and the text you are reading is not the right one...